Certification of AI systems by 2025 – utopia or reality? 2/2

Paula Lopez

The first part of this blog post discussed the importance of AI/ML trustworthiness in EASA's Artificial Intelligence Roadmap: a human-centric approach to AI in aviation. Published last year, the roadmap clearly showed how EASA aims to lay the groundwork for introducing AI/ML-driven systems into aviation operations.

The roadmap identifies four building blocks of AI trustworthiness, which this post reviews in turn.

1. AI trustworthiness analysis (including human-AI interface)

Any prospective AI system must first comply with ethical principles. In particular, AI/ML applications shall address the seven key requirements of the EU ethics guidelines for trustworthy AI: human agency and oversight; technical robustness and safety; privacy and data governance; transparency; diversity, non-discrimination and fairness; societal and environmental well-being; and accountability.

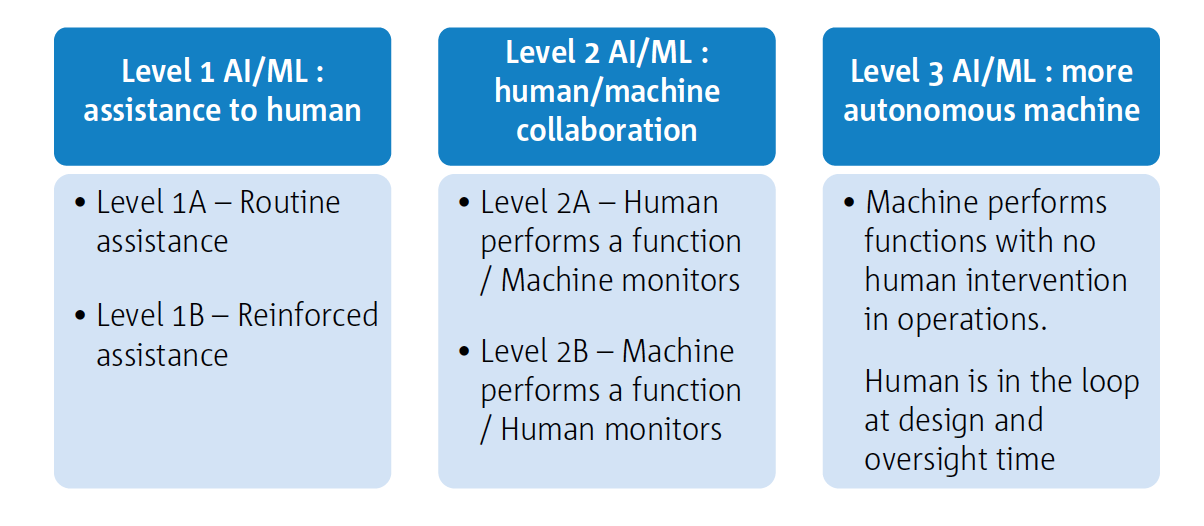

Building on these ethical principles, the roadmap considers three major levels of automation on the path to full autonomy. The first is an assistance role, covering more routine tasks. The second is a collaboration scenario, in which the human operator and the technology work together to carry out tasks (which requires developing an appropriate HMI). The third considers fully autonomous systems, where no human intervention is required during operation, although humans remain involved at the design and supervision levels. Although aviation uses its own automation scale, it follows a gradual progression similar to that of autonomous driving, where higher levels of automation have already been reached.

Source: Artificial Intelligence Roadmap, 2020, EASA

2. Learning assurance

Traditionally, assurance means demonstrating that a set of requirements has been met in the design and development of a system's components. For ML-based systems, this methodology needs to be revised to provide a new framework and new means of compliance. Although this framework is still at an early stage, the roadmap indicates where the focus should lie: “the assurance processes should be shifted on the training/verification data sets’ correctness and completeness, on the identification and mitigation of biases, on the measurement of the accuracy and performance of an ML application, on the identification and the use of novel verification methods, etc. A brand new ‘design assurance’ paradigm is needed.”

As discussed at length on this DataScience.aero blog, the emphasis falls on data preparation and the role of the training dataset, as well as on monitoring and verifying system performance during operations.
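To make the data-centred focus of learning assurance more concrete, here is a minimal sketch of two checks it points to: whether a dataset covers every required operating condition (completeness) and whether model accuracy differs across those conditions (a possible sign of bias). It is purely illustrative and assumes a toy setup: the `Sample` record, the condition names, the `min_count` threshold and both helper functions are hypothetical and are not taken from the EASA roadmap.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    # Hypothetical record: an operating condition, the true label, and the model's prediction
    condition: str
    label: int
    prediction: int


def coverage_gaps(samples, required_conditions, min_count):
    """Return the required conditions with fewer than min_count samples (completeness check)."""
    counts = Counter(s.condition for s in samples)
    # Counter returns 0 for conditions that never appear in the data
    return sorted(c for c in required_conditions if counts[c] < min_count)


def accuracy_by_condition(samples):
    """Accuracy per condition; large gaps between conditions may hint at bias."""
    totals = Counter()
    correct = Counter()
    for s in samples:
        totals[s.condition] += 1
        correct[s.condition] += int(s.label == s.prediction)
    return {c: correct[c] / totals[c] for c in totals}


data = [
    Sample("day", 1, 1),
    Sample("day", 0, 0),
    Sample("day", 1, 1),
    Sample("night", 1, 0),
    Sample("night", 0, 0),
]

print(coverage_gaps(data, {"day", "night", "fog"}, min_count=2))  # ['fog']
print(accuracy_by_condition(data))  # {'day': 1.0, 'night': 0.5}
```

In this toy data, "fog" is flagged as uncovered and the model performs worse at night. A real learning assurance process would define the required conditions and acceptable thresholds far more rigorously.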

3. Explainability of AI

Despite ongoing research to advance the explainability of AI from a technical perspective, notably from groups such as the High-Level Expert Group on Artificial Intelligence, explainability continues to elude AI/ML systems, especially in applications other than computer vision. Understanding, or interpreting in human terms, why a model produces a particular output for a given scenario remains difficult to pin down in concrete terms. Cooperation between humans and machines will require more trust and transparency, especially in decision-making processes.

4. AI safety risk mitigation

AI safety risk mitigation assumes that AI may never become fully explainable; as a result, some degree of human involvement must be retained in the process. Several human-machine collaboration roles are possible: keeping humans in command, having humans monitor outcomes while backup systems act as a safety net, or using independent AI agents as monitors. The roadmap also considers hybrid AI systems, in which AI models are combined with rule-based approaches. Alternatively, specific solutions may need to be identified for each application according to its level of safety criticality. Human factors and HMI experts play a key role in the successful design, development and implementation of these systems.
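The hybrid "AI plus rules with a safety net" idea can be sketched as a runtime guard. An ML component proposes a value, and a simple rule-based envelope decides whether to accept it. If the proposal falls outside the envelope, a conventional backup takes over and the human is alerted. This is a minimal illustration under stated assumptions. The single numeric output, the `[lower, upper]` envelope, and the names `guarded_decision`, `ml_model` and `fallback` are all hypothetical and do not describe any EASA-endorsed architecture.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Decision:
    value: float
    source: str         # "ml" if the ML proposal was accepted, "fallback" otherwise
    needs_review: bool  # hypothetical flag raised for the human operator


def guarded_decision(ml_model: Callable[[float], float],
                     fallback: Callable[[float], float],
                     x: float, lower: float, upper: float) -> Decision:
    """Accept the ML output only if it lies inside the rule-based envelope [lower, upper]."""
    proposal = ml_model(x)
    # NaN fails both comparisons, so an invalid proposal also triggers the fallback
    if lower <= proposal <= upper:
        return Decision(proposal, "ml", needs_review=False)
    # Safety net: use the conventional backup and flag the case for human review
    return Decision(fallback(x), "fallback", needs_review=True)


ml = lambda x: 2.0 * x   # stand-in for a trained model
backup = lambda x: 10.0  # stand-in for a conventional, rule-based function

print(guarded_decision(ml, backup, 3.0, 0.0, 20.0))   # ML output 6.0 accepted
print(guarded_decision(ml, backup, 15.0, 0.0, 20.0))  # ML output 30.0 rejected, fallback used
```

Keeping the monitor simple and rule-based is the point of the design. Its behaviour can be verified with traditional methods even when the ML component itself cannot be fully explained.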

Having established compliance with the ethical guidelines and the trustworthiness of the AI technology, EASA acknowledges the significant challenges this technology poses. These include the need for EASA staff to adapt to AI/ML practices, become involved in AI projects and research, and support AI strategies. In other words, EASA and its staff need to participate more in the development of AI technology in order to respond adequately to industry needs. To this end, EASA has started a number of internal organizational and regulatory changes and initiatives to achieve this goal in the short term. It has also drawn up a consolidated action plan to organize collaboration among EASA staff, its stakeholders, relevant EU institutions and research entities:

Source: Artificial Intelligence Roadmap, 2020, EASA

We look forward to following the progress of this plan!

References: