Machine learning imitates how humans acquire knowledge through experience. However, humans can also transfer knowledge across different tasks. Let’s say you know how to play the guitar – how hard would it be for you to learn how to play the banjo? What about piano – how much further learning would you need?

This theory of building atop previous experience, rather than learning from scratch, is a hot topic in machine learning nowadays. This methodology is called transfer learning. As Andrew NG commented in an NIPS 2019 tutorial: “After supervised learning – Transfer learning will be the next driver of ML commercial success”.

What is “transfer learning”?

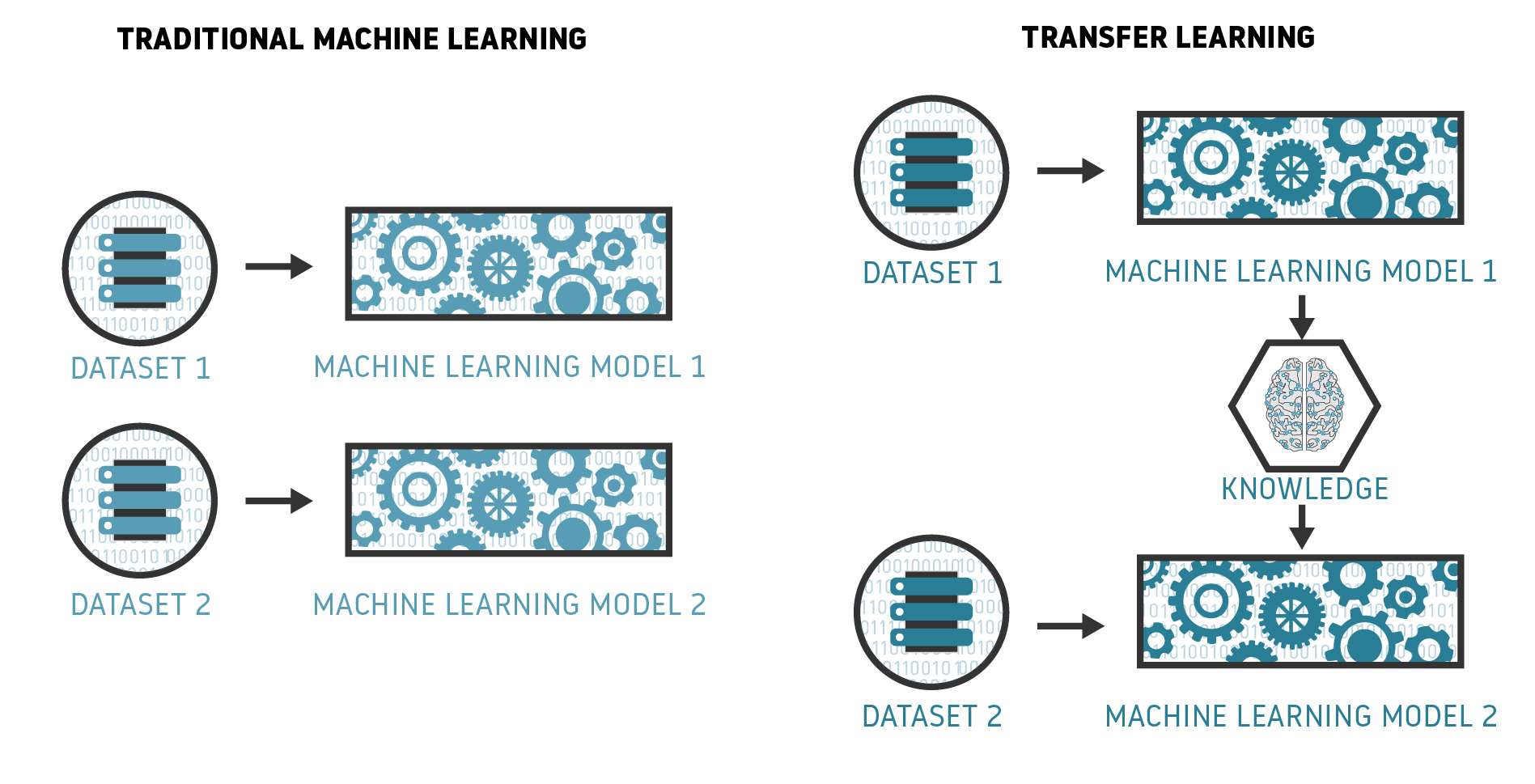

Transfer learning is an exciting concept which aims to redevelop the traditional idea that machine learning models need to be rebuilt from scratch, even when the new case study is just a features (domain variables) distribution change. With transfer learning, instead of having to train your model again and again, you could potentially use knowledge acquired for one task to solve related ones.

The key concept behind transfer learning in data science is deep learning models. They require A LOT of data, which, if your model is also supervised, means that you need a lot of labelled data. As everyone involved in a machine learning project knows, labelling data samples is very tedious and time consuming. This procedure will slow your model development team or even block the chance of success due to the lack of labelled training data. Another common problem with deep learning solutions is that, despite the high accuracy of state-of-the-art algorithms, they rely on very specific datasets and suffer vast losses of performance when new patterns and cases are introduced in real operation scenarios.

Transfer learning is not a new concept and actually dates back to the NIPS 1995 workshop Learning to Learn: Knowledge Consolidation and Transfer in Inductive Systems. In addition to being used to improve deep learning models, transfer learning is used in new methodologies for building and training machine learning models in general.

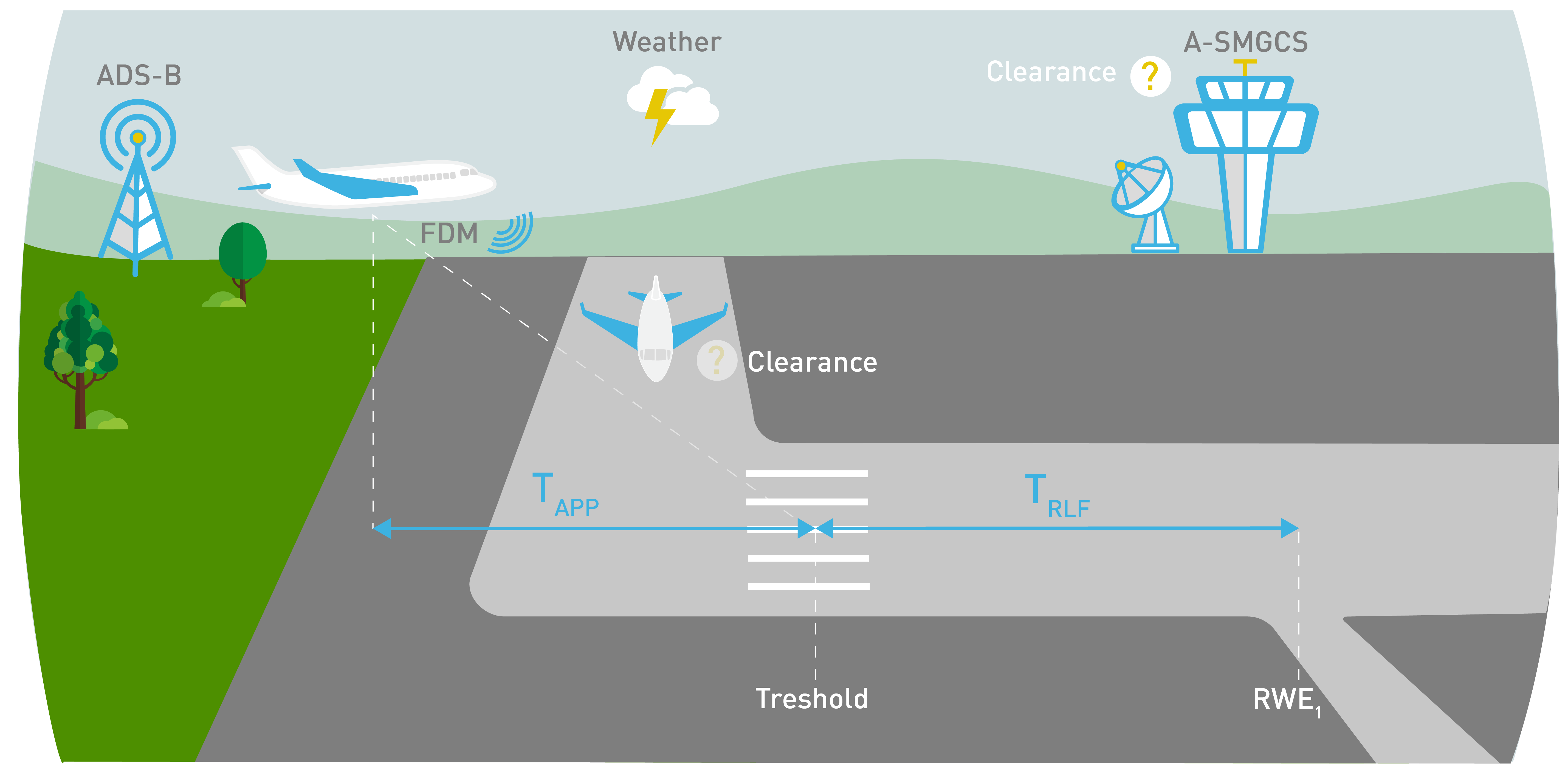

As an example, let’s take a look at a machine learning model we developed in a H2020 project called Safeclouds.eu. The model tries to accurately predict the arrivals runway occupancy time (AROT) at a distance of 2NM from the runway threshold:

- We were given a vast dataset, labelled by known AROTs, which contained operations on a given runway (R34) of a given airport (Vienna airport, LOWW). The dataset is composed of different data sources (radar tracks, flight plans, weather information, etc.).

- We used this data to train a machine learning model. The model generalised very well with unseen data points belonging to that domain, i.e. R34 of LOWW airport. Let’s refer to this prediction task as T1.

- Suppose that we then wanted to apply our trained model to the arriving operations on another runway of another airport, e.g. runway 25R on Barcelona airport, LEBL. Let’s refer to this new task as T2 in a new domain.

- In theory, we should have been able to just apply the trained model for T1, but a vast performance degradation would have been observed with the new predictions. This is a well-known problem in machine learning: when the domain is changed, we introduce bias to the model.

- In this scenario, we also would have noticed that we had way less data for T2 than for T1. In training another model, we wouldn’t have had enough samples for a strong performance on the predictions.

- As a result, only one solution would have remained: Apply Transfer Learning to “re-train” our T1 model with the new data available for T2.

By following this methodology, we could have generalised our predictive model to every airport world-wide!

The challenges of transfer learning and how to deal with them

In reality, the idea of using your pre-trained models for new tasks/domains is not that easy to apply. For example, if two domains are different, they may have different feature spacesor different marginal distributions. This means that variables that describe your new study case may not be the same.

Also, when two tasks are different, they may present different label spaces or different conditional distributions. This can mean that new, unseen cases may appear and your model may focus on detecting imbalanced cases (e.g. new situations that rarely occur).

In order to tackle these problems beforehand, we need to answer some questions:

- What do we need to transfer?: We need to identify which aspects of the model knowledge are relevant for the new case study. Normally, this involves assessing what the source and target have in common. Are the features the same? Is the target variable in a similar distribution? Are there new classes?

- When is it safe to transfer?: In some scenarios, transfer learning is not only inviable, but could make your model worse (known as negative transfer). We need to carefully assess both scenarios and double-check with domain experts from both cases.

- How we should transfer?: Once we are sure what we need to transfer and if it is viable, we have to identify the correct methodology for transferring knowledge across domains/tasks. It is important to know which existing algorithms and techniques are applicable. Don’t worry, we will look at some transfer learning algorithms in my next post.

Conclusions & future posts

Transfer Learning is by far the most promising enabler for machine learning as a mainstream product. The industry would probably need to adopt it in order to provide reliable solutions that can be quickly prototyped. I personally think this is going to be a key methodology in a certain future. For example, in recent years, libraries of pre-trained models for computer vision or speech recognition have already popped up (Google BERT, PyTorch’s torchvision, Tensorflow models, fastAI, etc.).

In future posts, we will take a look at existing methodologies and algorithms for transfer learning, as well as some successful use cases of transfer learning from well-known machine learning players. Stay tuned!