Looking at the many applications of data science across scientific and technological fields, most (if not all) rely on the construction of a single model. This seems prima facie normal: if we want to classify individuals as healthy subjects or patients, we would expect one single model to provide a reliable diagnosis. There is yet another possibility: construct a set of models, each one based on different premises, to retrieve a wide range of possible answers. In the following text, we will explore the advantages (and challenges) of doing this.

Different models can yield different results. At first one may think that this is due to the classification models themselves, each of which implicitly makes some assumptions about the underlying data. For instance:

- A linear-kernel SVM expects the two classes of subjects to be separated by a line, or more generally by a hyperplane;

- A KNN model expects information to be encoded in the class of neighbouring instances;

- An ANN model is quite general, expecting only to find a non-linear relationship between input features and output classes.

As one can see, different models may yield different results: a linear SVM may fail to find any useful model on data where an ANN produces superb results.
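To make this concrete, here is a minimal sketch using only the Python standard library. It is an illustration under stated assumptions, not a benchmark: the data are a synthetic XOR-style grid, a simple perceptron stands in for the linear SVM (both can only draw a hyperplane), and a small hand-written k-nearest-neighbours vote plays the role of the KNN model. All function names are hypothetical.

```python
import random


def make_grid(values):
    """Return XOR-style labelled points: class 1 when x and y share a sign."""
    return [((x, y), 1 if x * y > 0 else 0) for x in values for y in values]


# Training and test grids are offset, so no test point is also a training point.
train = make_grid([i / 10 for i in range(-9, 10, 2)])          # +-0.1 ... +-0.9
test = make_grid([i / 10 for i in range(-8, 9, 2) if i != 0])  # +-0.2 ... +-0.8


def train_perceptron(data, epochs=50, lr=0.1, seed=0):
    """Fit a linear decision boundary (stand-in for a linear-kernel SVM)."""
    rng = random.Random(seed)
    data = list(data)  # copy, so shuffling does not reorder the caller's list
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for (x, y), label in data:
            pred = 1 if w[0] * x + w[1] * y + b > 0 else 0
            err = label - pred  # 0 when correct, +1 or -1 when wrong
            w[0] += lr * err * x
            w[1] += lr * err * y
            b += lr * err
    return w, b


def predict_linear(model, point):
    (w, b), (x, y) = model, point
    return 1 if w[0] * x + w[1] * y + b > 0 else 0


def predict_knn(train_data, point, k=3):
    """Majority vote among the k closest training points."""
    px, py = point
    nearest = sorted(
        train_data,
        key=lambda item: (item[0][0] - px) ** 2 + (item[0][1] - py) ** 2,
    )[:k]
    votes = sum(label for _, label in nearest)
    return 1 if votes * 2 > k else 0


def accuracy(predict, data):
    return sum(predict(p) == label for p, label in data) / len(data)


model = train_perceptron(train)
linear_acc = accuracy(lambda p: predict_linear(model, p), test)
knn_acc = accuracy(lambda p: predict_knn(train, p), test)
print(f"linear model accuracy: {linear_acc:.2f}")
print(f"k-NN accuracy:         {knn_acc:.2f}")
```

On this symmetric grid no straight line can classify more than three out of every four mirrored points correctly, so the linear model is capped at 75% accuracy, while the neighbour-based model only needs the local structure of the data. The gap between the two says something about the data, not just about the models.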

The way data are initially processed is another source of variability, and one that is usually disregarded. Each researcher has their own way of identifying extreme events, and of deciding whether it is necessary to filter them. Researchers may synthesise different high-level features, which may not coincide. Different researchers may also have different views on the final business application: one may favour a fast yet slightly unreliable model, while another may favour maximum reliability and disregard the computational cost of the solution. Given all this, even the assessment of the statistical significance of results may differ. Two research groups may therefore reach quite different models, even when the underlying algorithm, be it an SVM or an ANN, is the same.
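A toy sketch of this effect, assuming made-up numbers and two hypothetical analysts: both fit the same least-squares line to the same ten observations, but only one of them filters extreme values first. The two-standard-deviation rule is just one possible convention, chosen here for illustration.

```python
from statistics import mean, stdev


def slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


# Invented data: roughly y = 2x, with one extreme final observation.
xs = list(range(1, 11))
ys = [2.1, 3.9, 6.2, 8.1, 9.8, 12.2, 13.9, 16.1, 18.0, 45.0]

# Analyst A keeps every observation.
slope_all = slope(xs, ys)

# Analyst B drops values more than two standard deviations from the mean.
centre, spread = mean(ys), stdev(ys)
kept = [(x, y) for x, y in zip(xs, ys) if abs(y - centre) <= 2 * spread]
slope_filtered = slope([x for x, _ in kept], [y for _, y in kept])

print(f"slope, all data:      {slope_all:.2f}")
print(f"slope, filtered data: {slope_filtered:.2f}")
```

Same data, same algorithm, yet the estimated slope differs by more than a unit depending on a single pre-processing decision. Neither analyst is obviously wrong; whether the last point is noise or signal depends on domain knowledge the data alone do not provide.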

Is this a real issue, that is, something that we find in real-world problems? Or is it just a theoretical problem? A recently published paper in Nature (1) discusses the importance of this aspect of data science. It reports an experiment in which 29 research groups were asked to assess whether football referees are more likely to give red cards to players with dark skin than to players with light skin. In spite of building upon the same data set, results were extremely heterogeneous: from no bias detected to a threefold bias!

How can we deal with this issue? From a personal perspective, I would ask: should we really deal with it? Clearly, the standard approach assumes that there is one single true model, i.e. the model that is driving the behaviour we are observing, and that we should be able to reconstruct it exactly; that is to say, all synthesised models should converge towards the real one. The reader will recognise this to be the equivalent of a frequentist approach (2). A more Bayesian mind would nevertheless admit that there may not be a universal truth, and that all models are somehow correct, at least in that we can learn from each of them.

The question should then be: how can this multi-analysis approach be implemented in real data science projects? Below is my personal proposal, with the hope of sparking further discussion and refinements:

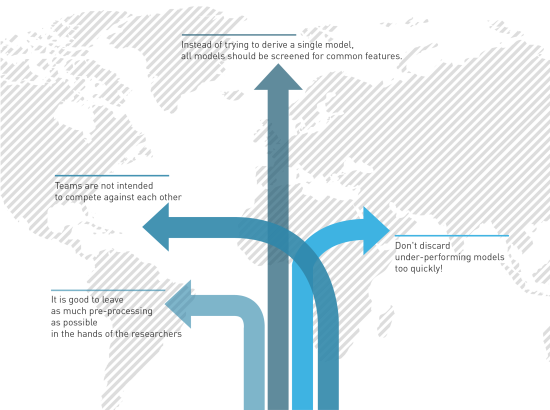

- Allow different research teams to work independently on the data, with the following clarifications. First, while the studied data set must be the same, it is good to leave as much pre-processing as possible in the hands of the researchers, in order to assess whether different views can emerge. Second, teams are not intended to compete against each other, as there will be no winner at the end, just different points of view. Admittedly, it may be difficult to convince people to set their egos aside!

- Instead of trying to derive a single model, all models should be screened for common features. For instance, a single feature may be prominent in each of them; such a feature may then be recognised as a truly key element, i.e. something that holds independently of the point of view.

- Finally, don’t discard under-performing models too quickly! While they may not be useful for the final business application, they can still teach us a lot, especially in terms of what has gone wrong. Remember the previous example: the fact that an SVM model underperforms with respect to an ANN one may be indicative of the presence of a non-linear relationship, and this information can be used to rule out linear models.
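The screening step in the second point could be sketched as follows. This is only one possible reading of "common features": it assumes each team reports some importance score per feature, and it keeps the features that rank in the top `k` for every team. The team names, feature names, and scores are all invented for illustration.

```python
from collections import Counter

# Hypothetical per-team feature importances (higher means more important).
team_importances = {
    "team_a": {"age": 0.40, "blood_pressure": 0.35, "bmi": 0.15, "smoker": 0.10},
    "team_b": {"blood_pressure": 0.50, "smoker": 0.30, "age": 0.10, "bmi": 0.10},
    "team_c": {"bmi": 0.45, "blood_pressure": 0.30, "age": 0.15, "smoker": 0.10},
}


def top_k(importances, k):
    """Return the names of the k highest-scoring features of one model."""
    ranked = sorted(importances, key=importances.get, reverse=True)
    return set(ranked[:k])


def consensus_features(all_importances, k=2):
    """Features that appear in the top k of every team's model."""
    tops = [top_k(scores, k) for scores in all_importances.values()]
    return set.intersection(*tops)


def top_k_counts(all_importances, k=2):
    """How many teams place each feature in their top k."""
    counts = Counter()
    for scores in all_importances.values():
        counts.update(top_k(scores, k))
    return counts


consensus = consensus_features(team_importances)
counts = top_k_counts(team_importances)
print("consensus features:", consensus)
print("top-2 counts:", dict(counts))
```

In this invented example the three teams disagree on their single most important feature, yet one feature appears in everyone's top two. The counts are kept alongside the strict intersection because a feature favoured by most, but not all, teams may still deserve a closer look.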