The case for digital assistants in ATM – Proposal for a framework to build AI tools that humans can engage with

David Perez

For several years now, we have championed digital assistants for ATC; in fact, we presented our first version of Victor5 in October 2021 during the World ATM Congress. We first heard the term “AI-powered digital assistants” at the SESAR Innovation Days 2020, when Marc Baumgartner mentioned the concept in his keynote speech (together with many other interesting ideas). We are presenting our next version of Victor5 at the World ATM Congress 2022 this week, and we are very excited about all the functionalities that we are building into our digital air traffic control twin (Level5), which runs on our powerful MLOps architecture (BeSt).

In the meantime, we have also been working on a framework that addresses the human factors challenges of incorporating AI in digital assistants. To do this, we needed to collaborate on a significant ad-hoc project with the top institutions in Europe working on AI and Human Factors, engaging both users and authorities. We are very happy to present SafeTeam, a collaborative project recently approved as the top-ranked evaluated proposal in one of the latest Horizon Europe calls. The project will run for three years from September 2022 and will leverage all the work that we have done in the last several years, together with the great expertise of this leading team in AI and HF.

We are very happy to be leading this interesting area in ATC, and we are looking forward to building the necessary tools for the introduction of AI in ATM in the coming years. As an introduction, we would like to explain what we mean by AI-assistance tools and by a Human Factors framework that can take AI into operations.

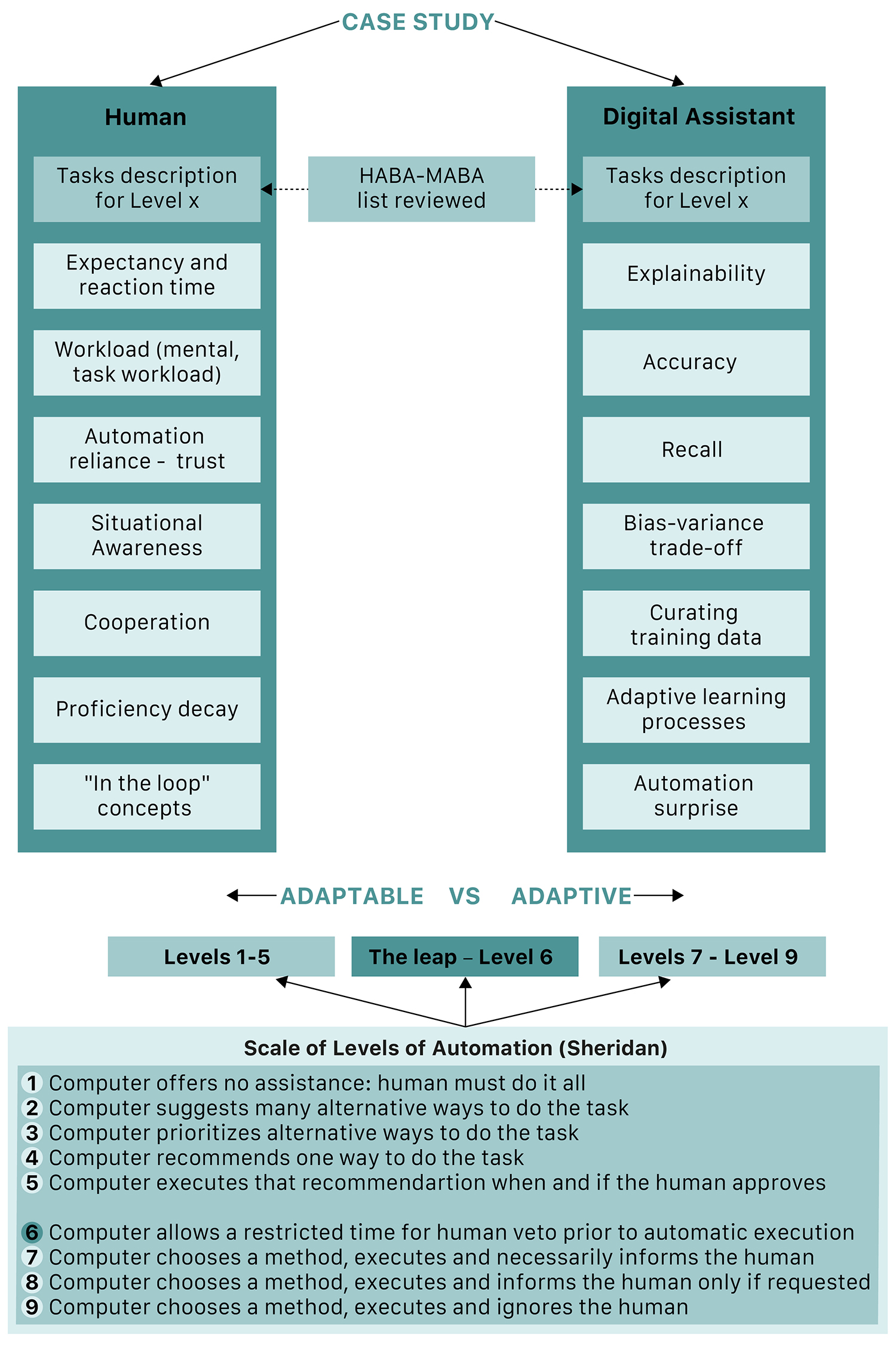

In 1951, the psychologist Paul Fitts proposed a distribution of tasks between humans and machines, distinguishing the strengths of each. The Fitts list, known as HABA-MABA (Humans Are Better At vs. Machines Are Better At), was visionary in outlining the human factors involved in automation research; it emphasised concepts like explainability (or knowledge of automation logic), trust, levels of automation (adaptive vs. adaptable control) and transition, human in the loop and automation surprise (Sheridan, 2002, 2014). The Fitts list remains a valid guide to understanding the collective transition to automation over the past 70 years, which, all things considered, has proceeded at a truly remarkable pace of adaptation (de Winter, 2014).

In the same year that Fitts developed his list, the first working AI programs, a checkers-playing program and a chess-playing program, ran on a computer at the University of Manchester. Five years later, in 1956, the field of AI research was founded at a workshop held on the campus of Dartmouth College (Dartmouth Summer Research Project on Artificial Intelligence, 1956). However, despite these pivotal projects and the establishment of AI research, the automation of industry applications has taken longer to develop.

In the last three decades, techniques based on training algorithms with historical datasets have achieved unprecedented improvement. The combination of new techniques with the increase in computer power and availability of scalable storage systems, along with the increase in complexity of aviation procedures and inherent safety requirements, has made us completely rethink workload and automation in aviation.

Today we are on the brink of a groundbreaking evolution in aviation—one that embraces AI and digitalisation. Big data and cloud computing have paved the way for the processing of massive historical datasets, and these same datasets can now train AI algorithms to support operational personnel, including aircraft crew and air traffic controllers, by identifying safety-critical scenarios and providing operators with the necessary assistance to act. Higher degrees of automation are, for the first time, being envisioned by the industry in the long term—full-automation concepts have even been proposed.

In the coming years, AI digital assistants will redefine workload and capacity in air traffic control operations, leading to a step change in automation and performance. Air traffic control systems will be part of this evolution despite the constraints of current Controller Working Position technology.

Big data and cloud computing have paved the way for the processing of massive historical datasets, and these same datasets can now train AI algorithms to support air traffic controllers by confidently identifying conflicts and non-conflicting traffic. New AI decision support tools will provide controllers and supervisors alike with advance warning of complex traffic scenarios, proposing separation instructions that ensure safety and optimise aircraft trajectories whilst minimising capacity restrictions and emissions.

DataBeacon, in cooperation with several Air Navigation Service Providers, is developing a methodology to analyse the aspects of human performance that are relevant to the introduction of digital assistants in the air traffic control operations room. In parallel, DataBeacon experts are looking into the different aspects of AI technology that relate to interactions with humans. A “reviewed HABA-MABA” list will build on the visionary work done by Fitts and will be updated to reflect developments in AI over the last decades.

A preliminary list of the aspects that will be reviewed on the human or HABA side includes the following:

- Task description for the target level of automation: For each target level of automation, a new description of the tasks for the human and for the machine needs to be drawn up, as automation changes the nature of the tasks.

- Expectancy and reaction time: The ability of an operator to respond to control signals and to react smoothly and precisely. Reaction time will be one of the KPIs.

- Workload, including mental and task overload: Workload with a digital assistant needs to be compared to the workload of the manual tasks. The cognitive cost for an operator of storing and processing very large amounts of information for long periods, and of recalling relevant facts at the appropriate time, could increase workload despite the introduction of new tools.

- Automation reliance and trust: Trust describes the extent to which an operator relies on an automatic control system. Trust has a strong influence on the use (and misuse) of automation in complex situations. Too little or too much trust can lead to inappropriate use of automation.

- Situational awareness: Ability to accurately detect and perceive all factors and conditions that may affect a certain situation.

- Cooperation: A process whereby two or more agents (human or system) work together towards the attainment of mutual or complementary goals. Aviation professionals are often confronted with human-system cooperation. This human-system interaction includes design and evaluation aspects that must be taken into account to ensure that different agents perform their assigned roles optimally, including through better adaptability of shared functions between humans and systems.

- Proficiency decay: After a period of reduced or paused activity (such as during the Covid-19 pandemic), operators’ skills degrade, which may lead to time management issues, reduced situational awareness and the inability to keep ahead of situations (EASA, 2021).

- In-the-loop concepts: A number of approaches that need to be taken into account to ensure human operators are kept up to date and engaged with the factors that are important when making decisions.

- Automation surprise: This refers to situations where the operator is surprised by the behaviour of the automation. “Automation surprises” are a direct instantiation of the difficulties in understanding automation and can result in an unsafe event (for example, difficulties in situations where the operator has to take over control). Such surprises need to be avoided by allowing fallback or seamless hand-over to the operator in case of severe system perturbations or unexpected output.

The following preliminary list of aspects is proposed for review on the machine or MABA side (in our case the digital assistant). Others may be added moving forward:

- Explainability: The ease and transparency with which a digital assistant presents the results of system-determined predictions so that human operators can understand and trust its decisions.

- Accuracy: Ability of a digital assistant to predict outcomes correctly, measured as the share of correct predictions out of all the predictions made.

- Recall: Ability of a digital assistant to store very large amounts of information for long periods and to recall relevant facts at the appropriate time.

- Bias-variance trade-off: Digital assistants based on machine learning techniques need to balance the simplifying assumptions made by a model against its ability to generalise, so that the model remains accurate when fed with new data that may differ from the training dataset.

- Curating a training dataset: The process by which a digital assistant collects, organises, labels and cleans aviation data to ensure it is of value to an operator.

- Adaptive learning processes: This refers to the capacity of a digital assistant to adapt and provide personalised learning to each operator. It is a technique which aims to provide efficient, effective, and customised solutions to each role.
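
As a rough illustration of the accuracy criterion in the list above, the sketch below computes the share of correct conflict/no-conflict predictions for a handful of aircraft pairs. The function name `accuracy` and the label lists are hypothetical and invented for this example; they are not taken from Victor5 or from any operational dataset.

```python
from typing import Sequence


def accuracy(predicted: Sequence[bool], actual: Sequence[bool]) -> float:
    """Share of aircraft pairs whose conflict/no-conflict label was predicted correctly."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    if not actual:
        raise ValueError("at least one labelled example is required")
    # Count the positions where the prediction matches the reference label.
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


# Hypothetical labels for eight aircraft pairs: True = conflict, False = no conflict.
actual_conflicts = [True, False, False, True, False, False, False, True]
predicted_conflicts = [True, False, False, False, False, True, False, True]

score = accuracy(predicted_conflicts, actual_conflicts)
print(f"accuracy = {score:.2f}")  # 6 of 8 pairs match, so this prints 0.75
```

In this made-up sample the assistant misses one real conflict and raises one false alert. Accuracy weighs both errors equally, which is one reason it would normally be read alongside the other criteria in the list rather than on its own.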

This methodology will be applied to several case studies on how ATCOs will use digital assistants in different positions in the operations room, including watch supervisors and planner or executive controllers.