Using ChatGPT to code solutions for Data Science problems in aviation

Antonio Fernandez

Artificial intelligence bots have become very popular recently, not only within the data science and machine learning communities but also across unrelated industries that have found creative uses for them. This kind of AI opens a whole new world of possibilities, and may pose a threat to some jobs, such as those of artists or graphic designers. On the other hand, developers remain a bit skeptical about being replaced by these AIs for coding, especially when developing safety-critical, sensitive software applications or when considering the deep operational knowledge these topics require. As a consequence of the massive number of people testing AIs like DALL-E or ChatGPT, some sites have been quite restrictive with users disseminating auto-generated replies; for instance, Stack Overflow, the web portal used daily by millions of developers, recently announced that it will temporarily ban answers generated by these AIs because they can be inaccurate or even wrong.

This situation has generated controversy in the software community as to how AI should be used when generating code answers. How far can we trust code responses that have been generated by an AI like ChatGPT? Are these answers supposed to be always correct? Or should they still be manually reviewed by human experts in order to guarantee quality and reliability?

What is ChatGPT?

ChatGPT is a natural language processing (NLP) model developed by OpenAI that is capable of generating human-like text. It uses a combination of machine learning algorithms and a large dataset of human conversation to generate responses to prompts in a conversational manner. ChatGPT is trained to understand the context and content of a conversation and generate appropriate responses, making it a powerful tool for generating text in a variety of contexts.

But what if this generated text needs to be a code snippet that aims to implement a particular function or software module? What would be the minimum context required by ChatGPT to obtain a consistent implementation? The amount of time spent fine-tuning the exact input prompt required to obtain a valid answer from the AI may ultimately surpass the time needed to perform the requested task.

The best way to answer these questions is to experiment with ChatGPT and evaluate how it performs. In this post, we are going to explore ChatGPT's applicability to code implementation by presenting it with some scenarios (prompts) that are very common in typical aviation problems. With these examples, we try to cover multiple data science disciplines, such as data generation, labelling, feature engineering and machine learning model training, in order to assess the quality of the replies obtained.

Scenarios

Data generation

Generate ADS-B data

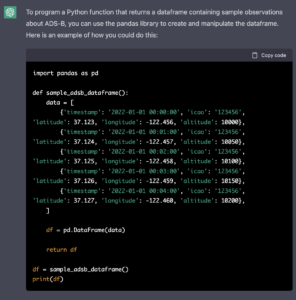

Let’s begin with the simplest use case: generating “fake” data, or just samples, for a particular aviation data source. For instance, we asked ChatGPT to generate some ADS-B data. As can be seen, ChatGPT clearly knows which fields ADS-B typically contains:

Prompt

Program a Python function that returns a dataframe containing sample observations about ADS-B
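
For reference, a function answering this prompt might look like the minimal sketch below. It is not ChatGPT's reply: the column names, value ranges and the `generate_adsb_samples` name are assumptions chosen for illustration, and the values are random rather than realistic traffic.

```python
import numpy as np
import pandas as pd


def generate_adsb_samples(n: int = 10, seed: int = 0) -> pd.DataFrame:
    """Return n synthetic ADS-B-like observations (illustrative values only)."""
    rng = np.random.default_rng(seed)
    addresses = rng.integers(0, 2**24, size=n).tolist()
    flight_numbers = rng.integers(0, 10_000, size=n).tolist()
    return pd.DataFrame({
        # 24-bit ICAO aircraft address rendered as a 6-character hex string
        "icao24": [f"{a:06x}" for a in addresses],
        # Hypothetical callsigns with a made-up "TST" prefix
        "callsign": [f"TST{f:04d}" for f in flight_numbers],
        # One observation per second starting at an arbitrary date
        "timestamp": pd.Timestamp("2023-01-01", tz="UTC")
        + pd.to_timedelta(np.arange(n), unit="s"),
        "latitude": rng.uniform(36.0, 44.0, size=n),
        "longitude": rng.uniform(-9.0, 4.0, size=n),
        "altitude_ft": rng.uniform(0, 40_000, size=n).round(),
        "ground_speed_kt": rng.uniform(100, 500, size=n).round(1),
        "heading_deg": rng.uniform(0, 360, size=n).round(1),
        "vertical_rate_fpm": rng.uniform(-3_000, 3_000, size=n).round(),
    })
```

Because each row is drawn independently, the rows do not form a coherent trajectory; they only illustrate the shape of the data.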

Generate trajectories between a certain OD pair

In this scenario, we ask ChatGPT to generate 1000 trajectories between LEMD and LEBL airports. This shows whether the AI understands both airport ICAO codes without being given any context.

Prompt

Program a Python function that generates a dataframe containing 1000 samples of point-to-point aircraft trajectories between LEMD and LEBL
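
As a point of comparison, one very simple way to satisfy this prompt is sketched below. It is not ChatGPT's reply. It assumes approximate airport coordinates, interpolates linearly in latitude and longitude (not along a great circle), and adds a random lateral bulge per trajectory; the function and column names are hypothetical.

```python
import numpy as np
import pandas as pd

# Approximate airport reference points (lat, lon in degrees); assumed values
AIRPORTS = {"LEMD": (40.47, -3.56), "LEBL": (41.30, 2.08)}


def generate_trajectories(origin="LEMD", destination="LEBL",
                          n_trajectories=1000, n_points=20, seed=0):
    """Return one row per trajectory point between two airports."""
    rng = np.random.default_rng(seed)
    lat0, lon0 = AIRPORTS[origin]
    lat1, lon1 = AIRPORTS[destination]
    frac = np.linspace(0.0, 1.0, n_points)  # progress along the route
    # The deviation envelope is zero at both ends, so every path
    # starts at the origin and ends at the destination
    envelope = np.sin(np.pi * frac)
    # One lateral deviation (in degrees of latitude) per trajectory
    offsets = rng.normal(0.0, 0.1, size=n_trajectories)
    lat = lat0 + frac * (lat1 - lat0) + offsets[:, None] * envelope
    lon = np.broadcast_to(lon0 + frac * (lon1 - lon0), lat.shape)
    return pd.DataFrame({
        "trajectory_id": np.repeat(np.arange(n_trajectories), n_points),
        "point": np.tile(np.arange(n_points), n_trajectories),
        "latitude": lat.ravel(),
        "longitude": lon.ravel(),
    })
```

Calling `generate_trajectories()` with the defaults returns 1000 trajectories of 20 points each in long format, one row per point.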

Labelling target or feature variables

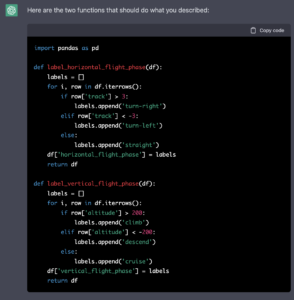

Label a flight phase for a given aircraft trajectory

Let’s move to the next level. In this scenario, we had to test multiple prompts in order to tune the response obtained. We observed that the AI needs much more detailed information about what is expected; otherwise its interpretation is very open and the resulting code does not consistently solve the problem at hand.

Prompt

Program two separated Python functions, one able to label horizontal flight phase (turn-left or turn-right), and another one able to label vertical flight phase (climb, cruise or descend), given a dataframe with aircraft trajectory as a parameter with the standard ADSB columns. Horizontal threshold is 3 degrees and vertical is 200ft

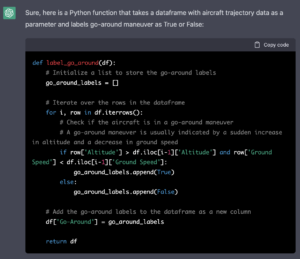
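
To make the thresholds in the flight phase prompt concrete, here is a minimal sketch of the two labelling functions. It is not ChatGPT's reply, and it rests on several assumptions: the dataframe holds a single flight sorted by time, the columns are named `heading` (degrees) and `altitude` (feet), the thresholds apply to the change between consecutive samples, and samples below the threshold get an extra `straight` label that the prompt does not mention.

```python
import numpy as np
import pandas as pd


def label_horizontal_phase(df, threshold_deg=3.0):
    """Label each sample 'turn-left', 'turn-right' or 'straight'."""
    # Wrap the heading change into [-180, 180) so that 350 -> 10
    # counts as a 20 degree right turn, not a 340 degree left turn
    delta = (df["heading"].diff().fillna(0.0) + 180.0) % 360.0 - 180.0
    labels = np.select(
        [delta > threshold_deg, delta < -threshold_deg],
        ["turn-right", "turn-left"],
        default="straight",
    )
    return pd.Series(labels, index=df.index, name="horizontal_phase")


def label_vertical_phase(df, threshold_ft=200.0):
    """Label each sample 'climb', 'descend' or 'cruise'."""
    delta = df["altitude"].diff().fillna(0.0)
    labels = np.select(
        [delta > threshold_ft, delta < -threshold_ft],
        ["climb", "descend"],
        default="cruise",
    )
    return pd.Series(labels, index=df.index, name="vertical_phase")
```

Note that the result depends on the sampling interval: a 200 ft change between samples one second apart is a very different climb rate from the same change over a minute, which is exactly the kind of detail the prompt leaves open.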

Label go-around

Let’s continue by labelling a variable that is intrinsic to the aviation context, such as a go-around. In this case, the AI automatically understands what a go-around is, and implements a function based on altitude and ground speed variations.

Prompt

Program a Python function able to label aircraft go-around maneuver (true, false), given a dataframe with aircraft trajectory as a parameter with the standard ADSB columns.
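
A deliberately simplified heuristic for this task is sketched below. It is not ChatGPT's reply, and unlike the answer described above it looks at altitude only: a trajectory is flagged when the aircraft has descended below an assumed approach altitude and later climbs back up by an assumed margin. It assumes the dataframe covers the arrival segment of a single flight and has `timestamp` and `altitude` (feet) columns; both thresholds are illustrative, not operational values.

```python
import pandas as pd


def label_go_around(df, max_low_alt_ft=2000.0, min_climb_ft=500.0):
    """Return True if the arrival descends low and then climbs back up."""
    alt = df.sort_values("timestamp")["altitude"]
    # Lowest altitude reached up to and including each sample
    lowest_so_far = alt.cummin()
    # Height regained above that lowest point
    climbed_back = (alt - lowest_so_far) >= min_climb_ft
    was_low = lowest_so_far <= max_low_alt_ft
    return bool((climbed_back & was_low).any())
```

A departure would also satisfy this rule, since it starts low and climbs, so the input has to be restricted to arrivals before the label means anything.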

Sector occupancy

In this scenario, we tried to calculate the occupancy within a particular sector. As in the previous examples, the AI still demands a lot of detail to understand what is expected. In this case, it struggled to understand the window overlapping problem, and tried to solve it by discretising time into hourly bins.

Prompt

Program a Python function able to label sector occupancy per hour, given a dataframe with traffic entries and exits for that particular sector (callsign, sector, entry_epoch, exit_epoch) as input columns, and also the given capacity of the sector. We understand by occupancy the total number of flights which duration inside the sector intersects with [epoch_now, 1 hour after now] window

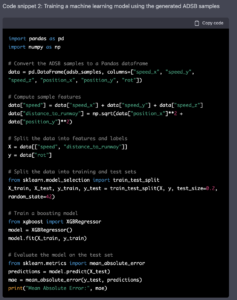
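
The window overlapping problem in the sector occupancy prompt reduces to an interval intersection test: a flight counts towards a window when it enters before the window ends and exits after the window starts. The sketch below, which is not ChatGPT's reply, applies that test for an arbitrary list of window start times, so windows may overlap. It assumes the dataframe is already filtered to one sector; the function name, the `window_starts` parameter and the output columns are hypothetical.

```python
import pandas as pd


def sector_occupancy(df, window_starts, capacity, window_s=3600):
    """Count flights whose stay in the sector intersects each window."""
    rows = []
    for start in window_starts:
        end = start + window_s
        # Two intervals intersect when each starts before the other ends.
        # Strict inequalities: a flight that only touches a window
        # boundary is not counted (a design choice, not a standard).
        inside = (df["entry_epoch"] < end) & (df["exit_epoch"] > start)
        occupancy = int(inside.sum())
        rows.append({
            "window_start": start,
            "occupancy": occupancy,
            "over_capacity": occupancy > capacity,
        })
    return pd.DataFrame(rows)
```

Passing window starts spaced one minute apart gives a sliding one-hour occupancy, which avoids forcing flights into fixed hourly bins.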

Model training and testing

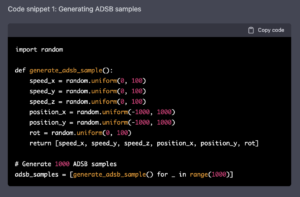

We asked ChatGPT to generate two separate snippets, one generating data and the other using that data to train a machine learning model to predict runway occupancy time (ROT). You may notice that the AI correctly identified the machine learning problem as a regression.

Prompt

Generate two Python code snippets. First, generate ADSB samples. Secondly, the code to train a machine learning model using boosting framework to predict runway occupancy time (ROT) using the generated ADSB samples as features
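
For illustration, the two snippets could be sketched as follows. This is not ChatGPT's reply. It assumes scikit-learn's `GradientBoostingRegressor` as the boosting framework (the prompt does not name one), uses a handful of hypothetical ADS-B-like features, and fabricates the ROT target from a made-up linear relation with ground speed plus noise, so the model and its error say nothing about real runway operations.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split


def make_landing_samples(n=500, seed=0):
    """Snippet 1: synthetic ADS-B-like features with a synthetic ROT target."""
    rng = np.random.default_rng(seed)
    df = pd.DataFrame({
        "ground_speed_kt": rng.uniform(110, 160, size=n),
        "vertical_rate_fpm": rng.uniform(-900, -500, size=n),
        "heading_deg": rng.uniform(0, 360, size=n),
    })
    # Made-up relation: ROT (seconds) grows with ground speed, plus noise
    df["rot_s"] = 20 + 0.25 * df["ground_speed_kt"] + rng.normal(0, 3, size=n)
    return df


def train_rot_model(df, seed=0):
    """Snippet 2: fit a boosted regressor and report held-out MAE in seconds."""
    X = df.drop(columns="rot_s")
    y = df["rot_s"]
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=seed
    )
    model = GradientBoostingRegressor(random_state=seed)
    model.fit(X_train, y_train)
    mae = mean_absolute_error(y_test, model.predict(X_test))
    return model, mae
```

Usage is `model, mae = train_rot_model(make_landing_samples())`. The regressor is the natural choice here because ROT is a continuous quantity measured in seconds.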

Conclusions

We tested ChatGPT's ability to generate code snippets that solve typical aviation problems. Although the technology is very impressive and promising, the results depended heavily on the input prompt provided, and the outputs were sometimes misleading. We see this kind of AI as an incredible tool to support developers, sometimes faster and more accurate than googling answers. Nevertheless, human review is always needed to validate the bot's reply, especially for applications that require further context or definitions due to industry particularities. We foresee an incredible margin for improvement in these emerging technologies during the upcoming year, and some of these improvements may speed up development for small to medium data science teams.